AI Change Management for Small Nonprofits

The quick and easy way to successfully use AI in your small nonprofit

Quick View: The 5‑Stage AI Change Loop

1. Frame & Safeguard

o Define purpose and outcomes in plain language.

o Establish light‑touch AI guardrails (data privacy, equity, transparency).

o Pick a single Executive Sponsor and a Change Lead.

2. Spot & Score Use Cases

o Collect ideas from staff; score by impact, effort, risk, mission‑fit.

o Choose 1–2 tiny pilots.

3. Pilot & Measure

o Time‑boxed pilots with success metrics and a rollback plan.

o Capture prompts, workflows, and failure modes.

4. Adopt & Train

o Turn winning pilots into SOPs, training, and checklists.

o Update comms, budget, and roles.

5. Scale & Govern (Ongoing)

o Quarterly review of value, risks, and equity impacts.

o Add new use cases only when the last one is stable.

Rhythm:

Weekly 30‑minute AI Huddle + monthly 60‑minute Value & Risk Review. Keep change visible, small, and predictable.

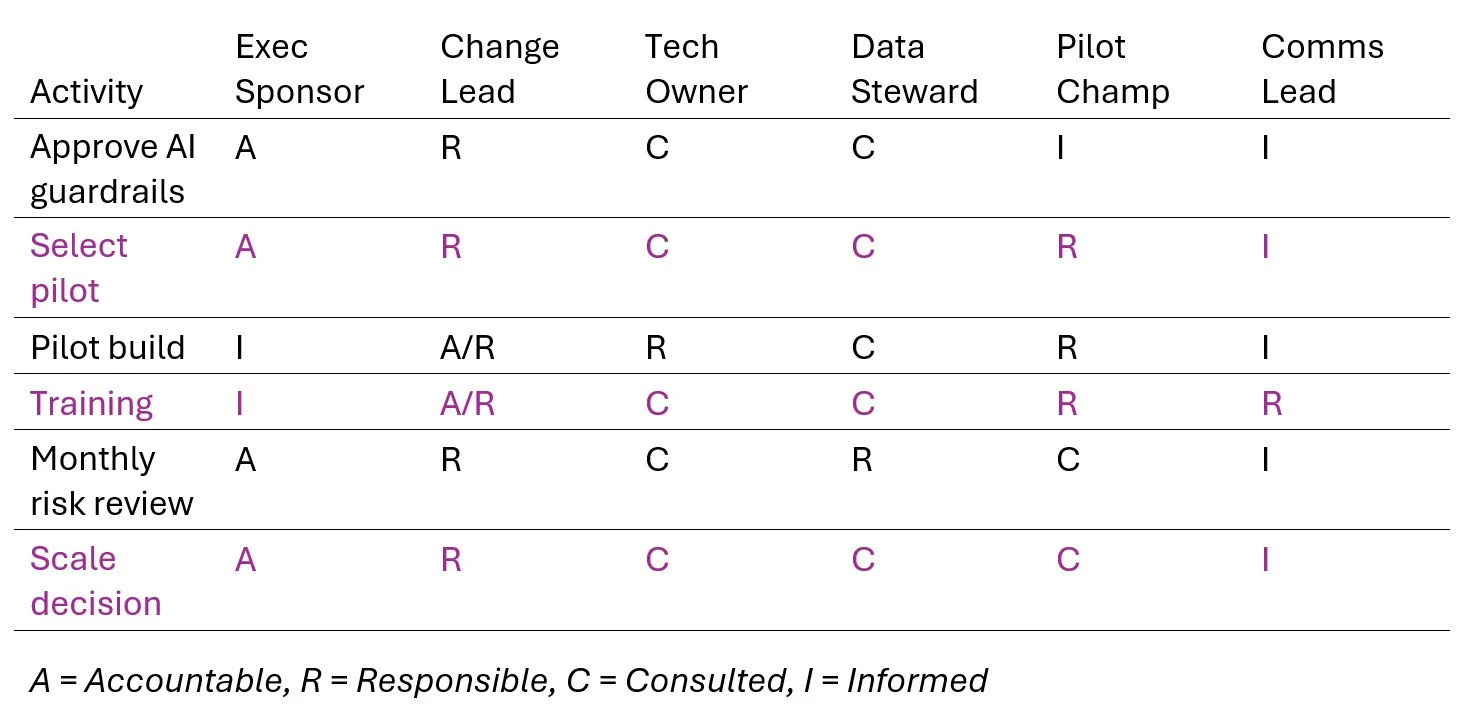

1) Roles & RACI

• Executive Sponsor — removes obstacles; approves policy and budget.

• Change Lead (MJ / Consultant) — facilitates the loop and leads coaching and measurement.

• Tech Owner — sets up tools; ensures security/permissions.

• Data Steward — safeguards sensitive data and oversees retention and sharing.

• Pilot Champion — a frontline staff member who co‑designs the pilot.

• Comms Lead — crafts messages for staff/board and gathers feedback.

RACI table (template)

Responsible (R) = the doers. They execute the task and produce the deliverable. You can have several Rs.

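Accountable (A) = the owner. This person answers for the result, has decision/sign-off authority, and makes the call if there’s a conflict. There should be exactly one A per task.

Consulted (C) = the advisors. Their input is sought before the work is done or the decision is made (two-way).

Informed (I) = the people kept in the loop. They are updated on progress or decisions (one-way).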

If it goes sideways, who gets the phone call? That’s the A.

Who rolls up their sleeves? That’s R.
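If you keep the RACI table as data (a spreadsheet export or a small script), you can check the "exactly one A per task" rule automatically. Below is a minimal Python sketch, assuming the table is stored as a mapping of task to role to letter. The tasks and assignments are made-up examples, and flagging a task with no R is an extra check we assume is useful rather than part of the rule above.

```python
from typing import Dict, List

# Hypothetical RACI table: task -> {role: letter}. Roles come from the list
# above; the tasks and letter assignments are illustrative only.
RaciTable = Dict[str, Dict[str, str]]

raci: RaciTable = {
    "Approve AI policy": {
        "Executive Sponsor": "A",
        "Change Lead": "R",
        "Data Steward": "C",
        "Comms Lead": "I",
    },
    "Run grant pilot": {
        "Change Lead": "A",
        "Pilot Champion": "R",
        "Tech Owner": "R",
        "Data Steward": "C",
    },
}


def check_raci(table: RaciTable) -> List[str]:
    """Return a list of problems; an empty list means every task passes."""
    problems: List[str] = []
    for task, assignments in table.items():
        letters = list(assignments.values())
        a_count = letters.count("A")
        if a_count != 1:  # the rule: exactly one A per task
            problems.append(f"{task}: expected exactly one A, found {a_count}")
        if "R" not in letters:  # assumed extra check: someone must do the work
            problems.append(f"{task}: no R assigned")
    return problems


print(check_raci(raci) or "RACI looks good")
```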

2) Light‑Touch AI Guardrails (copy/paste policy)

Purpose: Use AI to save staff time and improve mission impact while protecting people, data, and equity.

Allowed uses: drafting, summarizing, brainstorming, translation, data cleanup, accessibility (captions/alt text), and structured analysis with human review.

Not allowed: entering PII (personally identifiable information), health records, donor bank info, minors’ data, or legally protected details into public AI tools. No automated decisions affecting eligibility/services without human oversight.

Equity & harm checks: Before any rollout, run through the Ethics & Impact Checklist (below) and test with affected communities when feasible.

Transparency: Disclose AI assistance in external communications when meaningful. Staff always retain authorship responsibility.

Data: Use organization‑approved tools and shared drives; store prompts/SOPs in the knowledge base; follow retention rules.

Incident handling: If an AI tool produces harmful, biased, or private information, stop, take a screenshot, report it to the Change Lead and Data Steward, and log it in the Risk Register.
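The "no PII in public AI tools" rule is easier to follow with a quick pre-paste check. Here is a minimal Python sketch, assuming simple regular expressions for email addresses, US-style phone numbers, and US Social Security numbers. It will miss names, addresses, health details, and anything formatted unusually, so treat it as a first filter before human review, never as proof that a document is safe to paste.

```python
import re
from typing import List, Tuple

# Illustrative patterns only. They catch common email, US-style phone, and
# US Social Security number formats; they will miss names, addresses, and
# anything written in an unusual format.
PATTERNS: List[Tuple[str, re.Pattern]] = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"(?:\(\d{3}\)\s?|\b\d{3}[-.\s])\d{3}[-.\s]\d{4}\b")),
]


def redact(text: str) -> Tuple[str, List[str]]:
    """Replace matches with [LABEL] placeholders and report which labels fired."""
    found: List[str] = []
    for label, pattern in PATTERNS:
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


clean, flagged = redact("Email jane.doe@example.org or call (555) 123-4567.")
print(clean)    # Email [EMAIL] or call [PHONE].
print(flagged)  # ['EMAIL', 'PHONE']
```

If anything gets flagged, that is a cue to stop and check the whole document with the Data Steward, not just to paste the redacted version.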

3) Ethics & Impact Checklist

• Who benefits? Who could be harmed or excluded?

• Are we reinforcing bias in language, imagery, or datasets?

• Could outputs be misleading (“hallucinations”)? What’s our verification step?

• What data enters the tool? Any sensitive fields? Is training‑on‑your‑data disabled?

• What is the easiest way for someone to opt out or get a human review?

• What will we publicly disclose about AI use here?

• What metrics show equity is improving (or regressing)?

Green‑light rule: If any answer makes you uneasy and there’s no mitigation, pause and redesign the pilot.

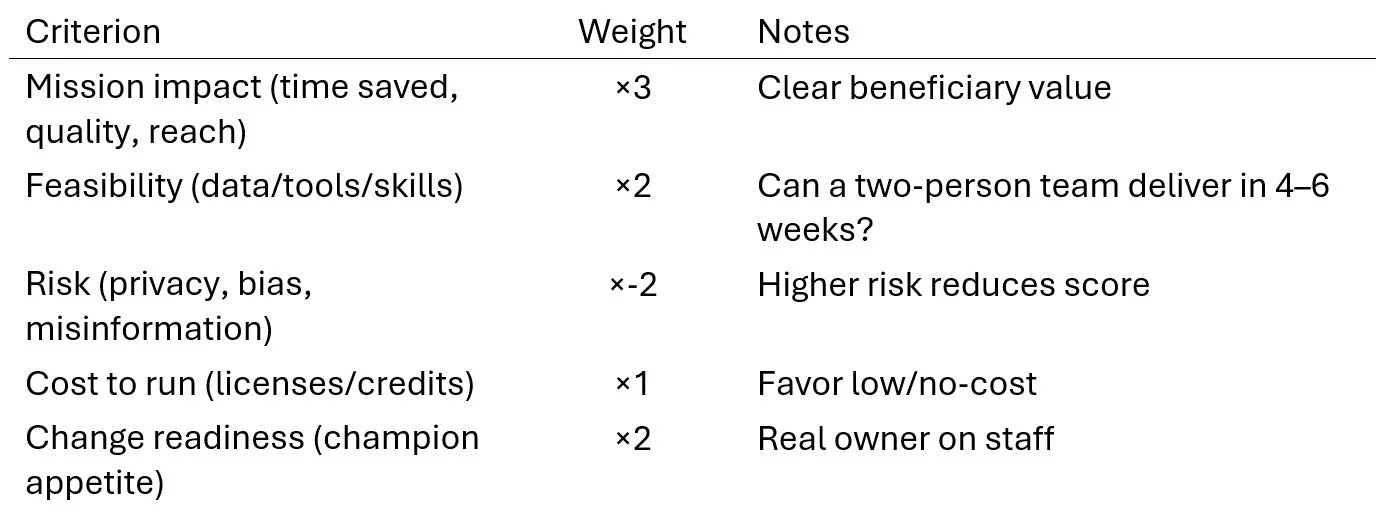

4) Use‑Case Scoring Card

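Score each idea 1–5 on impact, effort, risk, and mission‑fit, where 5 is always best (so low effort and low risk score high), then add the four scores.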

Pick the top 1–2; everything else goes to the backlog.
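The scoring card is just addition and sorting, so it fits in a few lines. This Python sketch assumes the four criteria from stage 2 of the loop (impact, effort, risk, mission-fit), each scored 1–5 with 5 always best. The idea names come from the starter pilots later in this post; the scores are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class UseCase:
    name: str
    impact: int       # 5 = biggest payoff in time or mission
    effort: int       # 5 = least effort to set up
    risk: int         # 5 = lowest privacy/equity risk
    mission_fit: int  # 5 = strongest fit with the mission

    def total(self) -> int:
        scores = (self.impact, self.effort, self.risk, self.mission_fit)
        if any(not 1 <= s <= 5 for s in scores):
            raise ValueError(f"{self.name}: every score must be 1-5")
        return sum(scores)


def pick_pilots(ideas: List[UseCase], how_many: int = 2) -> Tuple[List[UseCase], List[UseCase]]:
    """Rank by total score: the top picks become pilots, the rest go to the backlog."""
    ranked = sorted(ideas, key=lambda u: u.total(), reverse=True)
    return ranked[:how_many], ranked[how_many:]


ideas = [
    UseCase("Meeting notes -> tasks", impact=4, effort=4, risk=4, mission_fit=3),
    UseCase("Grant kit builder", impact=5, effort=3, risk=4, mission_fit=5),
    UseCase("Donor thank-you variants", impact=3, effort=4, risk=2, mission_fit=3),
    UseCase("Website accessibility sweep", impact=4, effort=3, risk=5, mission_fit=4),
]
pilots, backlog = pick_pilots(ideas)
print([u.name for u in pilots])   # ['Grant kit builder', 'Website accessibility sweep']
print([u.name for u in backlog])  # ['Meeting notes -> tasks', 'Donor thank-you variants']
```

Python's sort is stable, so tied ideas keep their input order. Settle ties with a conversation, not by list position.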

5) 30‑Day Pilot Plan (template sample)

Pilot name: e.g., “Grant summary + first draft”

Goal: Save 6 hours per grant by generating a summary and a first draft using our own materials.

Scope: 3 grants max, one program area, English only.

Success metrics:

• Time saved per grant (target ≥ 4 hours)

• Reviewer accuracy score (target ≥ 90%)

• Staff satisfaction (target ≥ 4/5)

Risks & mitigations:

Hallucinations → mandatory source citations and reviewer check

Sensitive data → use redacted docs; never paste donor PII

Tone mismatch → brand voice prompt + final human edit

Artifacts to capture: prompt set, screenshots, before/after time, edge cases, final SOP.

Rollback trigger: Any privacy breach or < 70% reviewer accuracy → halt and fix.
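The success metrics and rollback trigger combine into one small decision rule. This Python sketch uses the thresholds from the sample plan above (≥ 4 hours saved, ≥ 90% reviewer accuracy, ≥ 4/5 satisfaction, halt on any privacy breach or < 70% accuracy). The "keep iterating" outcome for results that are neither a success nor a rollback is an assumption, because the plan itself doesn't name that middle case.

```python
from dataclasses import dataclass

# Thresholds copied from the sample 30-day pilot plan.
MIN_HOURS_SAVED = 4.0
MIN_ACCURACY_PCT = 90.0
MIN_SATISFACTION = 4.0
ROLLBACK_ACCURACY_PCT = 70.0


@dataclass
class PilotResults:
    hours_saved_per_grant: float
    reviewer_accuracy_pct: float
    staff_satisfaction: float  # 1-5 scale
    privacy_breaches: int


def evaluate(r: PilotResults) -> str:
    # The rollback trigger wins over everything else.
    if r.privacy_breaches > 0 or r.reviewer_accuracy_pct < ROLLBACK_ACCURACY_PCT:
        return "halt and fix"
    # Success means every target is met, not just most of them.
    if (
        r.hours_saved_per_grant >= MIN_HOURS_SAVED
        and r.reviewer_accuracy_pct >= MIN_ACCURACY_PCT
        and r.staff_satisfaction >= MIN_SATISFACTION
    ):
        return "success"
    # Assumed middle case: no rollback, but targets not yet met.
    return "keep iterating"


print(evaluate(PilotResults(5.0, 92.0, 4.3, 0)))  # success
print(evaluate(PilotResults(5.0, 82.0, 4.5, 0)))  # keep iterating
print(evaluate(PilotResults(6.0, 95.0, 5.0, 1)))  # halt and fix
```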

6) SOP Template (turn a win into policy)

Title: SOP – [Process]

Purpose: Why this exists and who benefits.

Inputs: What content or data is required and where it lives.

Steps: Numbered, minimal clicks. Include screenshots or a 2‑minute video.

Prompts: Paste tested prompts with variables in {braces}.

Quality check: Short review list (facts, tone, citations, bias scan).

Data care: Where files are stored, retention period, who can access.

Change log: Date, change, owner.
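Writing prompt variables in {braces} happens to match Python's `str.format` syntax, so a tested prompt can be filled in the same way every time. The sketch below is a minimal example built around a hypothetical grant-summary prompt. It lists the variables a template expects and refuses to build the prompt if one is missing, so nobody sends a half-filled prompt to the AI tool.

```python
from string import Formatter
from typing import Set

# Hypothetical tested prompt, as it might appear in an SOP's "Prompts" field.
GRANT_SUMMARY_PROMPT = (
    "Summarize the attached {document_type} for {funder_name} in under "
    "{word_limit} words. Use our brand voice: {brand_voice}. "
    "Cite the page number for every fact."
)


def prompt_variables(template: str) -> Set[str]:
    """List the {brace} variables a template expects."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def fill_prompt(template: str, **values: str) -> str:
    """Fill a prompt template, refusing to continue if any variable is missing."""
    missing = prompt_variables(template) - set(values)
    if missing:
        raise KeyError(f"Missing prompt variables: {sorted(missing)}")
    return template.format(**values)


print(fill_prompt(
    GRANT_SUMMARY_PROMPT,
    document_type="annual report",
    funder_name="the Example Foundation",
    word_limit="250",
    brand_voice="warm and plain-spoken",
))
```

If a prompt needs literal braces (for example, a JSON snippet), double them as {{ and }} so `format()` leaves them alone.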

7) Communications Plan (snack‑size)

Kick‑off note (staff): Why we’re trying this, what’s in it for you, what will not change, how to give feedback.

Weekly update: What we tested, what worked, what failed, what’s next.

Board brief (monthly): Time saved, risks managed, decisions needed.

Celebrations: Shout‑outs for learning and cautious risk‑taking.

Tip: Keep messages short, human, and transparent. Avoid hype.

8) Measurement & Value Dashboard

(copy/paste metrics)

Time saved (hours/month)

Quality (reviewer accuracy %)

Adoption (# staff using SOP)

Risk (# incidents; time to resolve)

Equity (e.g., readability grade level, accessibility checks passed, language inclusivity score)

Satisfaction (staff 1–5; beneficiary feedback snippets)

Update monthly. Once a workflow is stable, stop tracking it closely and move your measurement effort to the next pilot.
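Most of these dashboard numbers are simple roll-ups of a task log. Here is a minimal Python sketch, assuming each use of an SOP is logged with before/after minutes, whether the reviewer accepted the output, and a 1–5 staff rating. "Reviewer accuracy" is approximated as the share of outputs accepted, and the equity metrics are left out because they depend on which checks you choose. The log entries are invented.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class TaskLog:
    staff: str
    minutes_before: float  # typical time for the task without the SOP
    minutes_after: float   # time with the AI-assisted SOP
    reviewer_pass: bool    # did the reviewer accept the output?
    satisfaction: int      # staff rating, 1-5


def monthly_dashboard(logs: List[TaskLog], incidents: int) -> Dict[str, float]:
    if not logs:
        raise ValueError("No task logs for this month")
    saved_minutes = sum(log.minutes_before - log.minutes_after for log in logs)
    passes = sum(log.reviewer_pass for log in logs)
    return {
        "time_saved_hours": round(saved_minutes / 60, 1),
        "reviewer_accuracy_pct": round(100 * passes / len(logs), 1),
        "staff_using_sop": len({log.staff for log in logs}),
        "risk_incidents": incidents,
        "avg_staff_satisfaction": round(mean(log.satisfaction for log in logs), 1),
    }


logs = [
    TaskLog("Ana", 240, 90, True, 5),
    TaskLog("Ana", 180, 60, True, 4),
    TaskLog("Ben", 200, 110, False, 3),
    TaskLog("Cy", 120, 60, True, 4),
]
print(monthly_dashboard(logs, incidents=0))
```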

9) Tools That Work on Scrappy Budgets

Work management: ClickUp or Asana (free/discounted nonprofit tiers)

Automation: Make.com (start small; add error notifications)

Docs/knowledge: Google Drive + a shared Prompt Library doc

Forms/feedback: Google Forms or Tally

Training: Loom (short videos), Scribe (auto step‑by‑steps)

Ethics check: Data Ethics Canvas (mirror it as a 1‑pager in your Drive)

Risk register: Simple Google Sheet using the template below

Comms: Slack/Teams #ai‑pilot channel, pinned SOPs & wins

Pick one tool per category. Consistency beats variety. These are just recommendations; if what you already have is working, use it.

10) Templates (ready to copy)

A) Risk Register (Google Sheet structure)

ID

Date

Use Case

Risk

Likelihood (L/M/H)

Impact (L/M/H)

Owner

Mitigation

Status
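If you'd rather start the Risk Register from a file than type the headers by hand, this Python sketch writes the columns above to CSV text you could import into Google Sheets. Sorting by likelihood × impact (L=1, M=2, H=3) is an assumed prioritization rule, not part of the template, and both example rows are hypothetical.

```python
import csv
import io
from typing import Dict, List

COLUMNS = ["ID", "Date", "Use Case", "Risk", "Likelihood (L/M/H)",
           "Impact (L/M/H)", "Owner", "Mitigation", "Status"]

LEVEL = {"L": 1, "M": 2, "H": 3}


def priority(row: Dict[str, str]) -> int:
    # Hypothetical ranking: likelihood x impact on a 1-3 scale (max 9).
    return LEVEL[row["Likelihood (L/M/H)"]] * LEVEL[row["Impact (L/M/H)"]]


def to_csv(rows: List[Dict[str, str]]) -> str:
    """Return CSV text, highest-priority risks first, ready to import into a sheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    for row in sorted(rows, key=priority, reverse=True):
        writer.writerow(row)
    return buffer.getvalue()


rows = [
    {"ID": "R-002", "Date": "2025-01-15", "Use Case": "Donor thank-you variants",
     "Risk": "Donor name pasted into a public tool", "Likelihood (L/M/H)": "L",
     "Impact (L/M/H)": "H", "Owner": "Data Steward",
     "Mitigation": "Redacted docs only; never paste donor PII", "Status": "Open"},
    {"ID": "R-001", "Date": "2025-01-15", "Use Case": "Grant summary + first draft",
     "Risk": "Draft invents a funder priority", "Likelihood (L/M/H)": "M",
     "Impact (L/M/H)": "H", "Owner": "Change Lead",
     "Mitigation": "Mandatory source citations and reviewer check", "Status": "Open"},
]
print(to_csv(rows))
```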

B) AI Decision Log (governance trail)

Date

Decision

Context

Options considered

Final choice

Who decided

Review date
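The Review date column is what keeps the log useful, since it tells the monthly Value & Risk Review which past decisions to revisit. Here is a minimal Python sketch, assuming the log is a list of records with the columns above; the two entries and their dates are made up.

```python
from datetime import date
from typing import List, NamedTuple


class Decision(NamedTuple):
    decided_on: date
    decision: str
    context: str
    options_considered: str
    final_choice: str
    who_decided: str
    review_date: date


def due_for_review(log: List[Decision], today: date) -> List[Decision]:
    """Decisions whose review date has arrived, earliest review date first."""
    return sorted(
        (d for d in log if d.review_date <= today),
        key=lambda d: d.review_date,
    )


log = [
    Decision(date(2025, 1, 10), "Adopt grant-summary SOP", "Pilot met its targets",
             "Adopt / extend pilot / stop", "Adopt", "Executive Sponsor", date(2025, 4, 10)),
    Decision(date(2025, 2, 3), "Pause donor thank-you pilot", "Tone did not match our voice",
             "Pause / continue", "Pause", "Change Lead", date(2025, 3, 3)),
]
for d in due_for_review(log, today=date(2025, 3, 15)):
    print(d.review_date, d.decision, "-", d.who_decided)
```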

C) Stakeholder Map

Directly affected (frontline)

Indirectly affected (programs, dev, comms)

Oversight (leadership, board)

Community partners / beneficiaries

For each: worries, wins, what they need to hear, best channel.

D) ADKAR‑in‑Practice Checklist

Awareness: 1‑page “why now” brief sent? Q&A session held?

Desire: What pain are we solving for staff? Who asked for this?

Knowledge: 10‑minute micro‑training + 2‑minute video recorded?

Ability: Did two people do the task end‑to‑end without help?

Reinforcement: Celebrated? Added to job descriptions? 30‑day check‑in done?

E) AI Use‑Case Intake Form (short)

What problem are you trying to solve? (2–3 sentences)

Who benefits? (internal/external)

What materials exist? (docs, datasets)

What does success look like? (time saved, quality)

Any privacy or equity concerns you already see?

F) 10‑10‑10 Pilot Blitz

10 minutes: Pitch the idea and success metric.

10 days: Build the smallest thing that could work.

10 people: Get feedback from a cross‑section.

11) Example Starter Pilots for Small Nonprofits

Grant kit builder: Generate a grant summary & first‑draft narrative from prior proposals and annual report language.

Meeting notes → tasks: Record, summarize, and auto‑create ClickUp tasks with owners and due dates.

Website accessibility sweep: AI‑assisted alt text and reading‑level check for the top 20 pages.

Donor thank‑you variants: Draft personalized thank‑you copy by segment, with human review.

Program report tidy‑up: Clean and standardize monthly program notes into a board‑ready brief.

Each pilot should have: a champion, a metric, a reviewer, and a risk plan.
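For the accessibility sweep's reading-level check (and the readability metric in the dashboard), the Flesch–Kincaid grade level is a common choice: 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) − 15.59. The Python sketch below implements that formula with a deliberately rough syllable counter, which is the assumption to watch. A maintained library such as textstat will be more accurate. Treat the number as a signal for human editing, not a verdict.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups and drop a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def fk_grade(text: str) -> float:
    """Flesch-Kincaid grade level: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / sentences + 11.8 * syllables / len(words) - 15.59


simple = "We help kids read. We give out free books."
dense = ("Our organization facilitates comprehensive educational "
         "opportunities for underserved communities.")
print(round(fk_grade(simple), 1), round(fk_grade(dense), 1))
```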

12) 90‑Day Roadmap (example)

Month 1: Guardrails + intake + pick 2 pilots. Train champions.

Month 2: Run pilots. Weekly huddles. Capture SOPs.

Month 3: Adopt one pilot org‑wide. Present value to board. Decide on next 1–2 pilots.

Quarterly: Value & Risk Review; archive dead pilots; refresh training.

13) Board‑Friendly Summary (example)

Why now: Staff are overwhelmed; funders expect efficiency and data‑driven stories.

Our approach: Tiny, safe pilots → adopt what works → scale carefully. Humans stay in charge.

Safeguards: No sensitive data in public AI; human review; equity checks; monthly oversight.

Value to date: [fill after pilots] hours saved, quality gains, risk incidents handled.

Ask: Endorse policy, celebrate small wins, and fund 1–2 next pilots.

14) Frequently Asked Skeptical Questions (and sane answers)

Will AI replace staff? No. It replaces tedium. People handle relationships, judgment, and accountability.

Isn’t AI biased? Yes—like people. That’s why we run ethics checks, test with real users, and keep a human in the loop.

Do we have enough data? For many use cases (writing, summarizing, translation), yes. Start with content you already own.

What if it makes things up? We require citations/verification and design prompts that reference your own materials.

Isn’t this a distraction? Only if we skip measurement. We adopt only what clearly saves time or improves outcomes.

15) Where This Starter Kit Meets Real Standards (FYI)

Aligns to ADKAR at the person level (Awareness → Reinforcement)

Mirrors NIST AI RMF functions at the org level (Govern, Map, Measure, Manage)

Uses a lightweight Ethics Canvas approach for equity and harm checks

ADKAR

ADKAR is a simple but powerful change management model developed by Prosci. It’s a way of understanding and guiding how individuals move through change. When applied to AI adoption in nonprofits, it helps ensure staff don’t just hear about AI but actually embrace and sustain it.

Here’s how it maps in the AI context:

A – Awareness

People need to know why AI is being introduced.

Example: Staff understand that AI pilots are about saving time on grant writing or improving accessibility, not cutting jobs.

D – Desire

People need to want to participate in the change.

Example: A program officer sees that AI can reduce reporting drudgery, freeing them to spend more time with community partners.

K – Knowledge

People need training on how to use AI safely and effectively.

Example: Quick Loom videos or workshops on writing effective prompts, data privacy guardrails, and when human review is required.

A – Ability

They need practice and support to actually do it.

Example: Staff run a 30-day AI pilot with a champion guiding them, prompts written down, and a simple SOP to follow.

R – Reinforcement

The organization needs to lock in the new habits so the change sticks.

Example: Celebrate time saved at staff meetings, update job descriptions to include “using AI SOPs,” and check adoption in quarterly reviews.

Why ADKAR matters for AI in nonprofits

Nonprofits are small and overstretched—people already wear multiple hats. Without Desire and Reinforcement, AI pilots fizzle out.

AI is disruptive and a little scary—Awareness and Knowledge steps reduce fear and misinformation.

It keeps adoption human-centered—you’re not just installing software; you’re changing how people work day-to-day.

In short: ADKAR is the human side of your AI pilots. It turns “we tried AI once” into “we’ve built new, sustainable workflows.”

ADKAR Checklist for AI Pilots

A – Awareness (Do we understand why?)

□ Have we explained clearly why AI is being piloted?

□ Do staff understand what problem AI is solving (time, quality, accessibility)?

□ Have we clarified what AI won’t replace (e.g., human judgment, relationship work)?

□ Has leadership endorsed the purpose and scope in plain language?

D – Desire (Do we want to take part?)

□ Have we identified a real staff pain point this pilot could ease?

□ Is there a visible “champion” who’s excited to try it?

□ Have we invited volunteers to test instead of forcing adoption?

□ Did we ask: What’s in it for staff, programs, and beneficiaries?

K – Knowledge (Do we know how?)

□ Did we provide a short training (e.g., 10–15 min video or demo)?

□ Are prompts and guardrails documented in a shared place?

□ Do staff know what not to do (e.g., never paste PII or donor data)?

□ Is there a clear “how-to” SOP or checklist to follow step by step?

A – Ability (Can we actually do it?)

□ Have 1–2 people completed the pilot task end-to-end without help?

□ Did we test the workflow with real documents, not just theory?

□ Are support channels clear (who to call when it breaks)?

□ Do we have a rollback plan if results are inaccurate or risky?

R – Reinforcement (Will it stick?)

□ Have we celebrated early wins (time saved, improved output)?

□ Is the new workflow added to job descriptions or SOPs?

□ Do we have a monthly adoption check-in?

□ Have we tied AI use to mission impact when reporting to the board/funders?

Tip: Run through this checklist at the start and end of every AI pilot. If more than 3 boxes are unchecked in a column, don’t scale the pilot yet.
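The tip above is a simple gate, sketched here in Python with each ADKAR column recorded as a list of checked (True) or unchecked (False) boxes. Since every column in this checklist has four boxes, the tip's threshold of "more than 3" only blocks scaling when a column is completely empty, so `max_unchecked` is a parameter in case your team wants a stricter gate. The example answers are hypothetical.

```python
from typing import Dict, List

# Each ADKAR column maps to its checkbox answers (True = checked).
Checklist = Dict[str, List[bool]]


def columns_blocking_scale(checklist: Checklist, max_unchecked: int = 3) -> List[str]:
    """Return the columns with more than `max_unchecked` unchecked boxes."""
    return [
        column
        for column, boxes in checklist.items()
        if boxes.count(False) > max_unchecked
    ]


def ready_to_scale(checklist: Checklist, max_unchecked: int = 3) -> bool:
    return not columns_blocking_scale(checklist, max_unchecked)


pilot: Checklist = {
    "Awareness": [True, True, True, True],
    "Desire": [True, True, False, True],
    "Knowledge": [True, False, True, False],
    "Ability": [True, True, False, True],
    "Reinforcement": [False, False, False, False],
}
print(columns_blocking_scale(pilot))                   # ['Reinforcement']
print(columns_blocking_scale(pilot, max_unchecked=1))  # ['Knowledge', 'Reinforcement']
print(ready_to_scale(pilot))                           # False
```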

NIST AI RMF

NIST AI RMF stands for the National Institute of Standards and Technology Artificial Intelligence Risk Management Framework. It’s a U.S. government–developed, voluntary guide designed to help organizations use AI responsibly, manage risks, and build trust.

Purpose

It’s not about telling you what AI you can or can’t use.

It’s about helping you identify, measure, manage, and govern risks—things like bias, security, explainability, reliability, and data privacy.

The goal is to increase trustworthiness of AI systems without killing innovation.

Structure

The framework is organized around four core functions:

Govern – Build the right policies, roles, and oversight into your organization.

Map – Understand the AI system’s context, intended use, and potential harms/benefits.

Measure – Use tools, tests, and metrics to assess AI performance, fairness, security, and trustworthiness.

Manage – Act on those assessments: mitigate risks, monitor ongoing performance, and communicate clearly with stakeholders.

Why it matters for nonprofits

Even small nonprofits can borrow its lightweight language of risk: identify who might be harmed, what data is sensitive, and how to handle transparency.

Funders and partners increasingly like to see that you’re not just “playing with shiny AI toys” but have a framework, even if simplified.

The RMF is free to download from the NIST website. NIST also publishes a companion Playbook with sample actions, plus case studies, which you can adapt into checklists and policies.

Ethics Canvas

An Ethics Canvas is a visual tool, a bit like a business model canvas but for ethics, that helps teams spot risks, values, and impacts when they’re designing or deploying technology. The version referenced in this kit, the Data Ethics Canvas, was developed by the Open Data Institute (ODI) and is widely used in data science, AI, and digital projects.

What it looks like

It’s usually a one-page worksheet divided into boxes, each box prompting you with a question such as:

Stakeholders: Who gains? Who might lose?

Impacts: What good could this create? What harms could it cause?

Values: What principles do we want to uphold?

Risks & mitigations: What could go wrong, and how will we handle it?

Transparency: How will we communicate choices and trade-offs?

Teams fill it in together—like a whiteboard exercise—so ethical reflection is baked into the design process, not bolted on at the end.

Why it benefits nonprofits

Simple and visual: no legalese or philosophy degree required.

Collaborative: staff, board, and even community members can weigh in.

Flexible: can be used for grant-writing, service design, AI pilots, or data-sharing agreements.

Documentation: the finished canvas is a record showing you thought about equity, fairness, and safety.

Short example in AI change management

Suppose you’re piloting an AI tool for summarizing grant reports:

Stakeholders = program staff, funders, beneficiaries.

Impacts = saves time for staff, but risk of oversimplifying nuance.

Values = accuracy, transparency, inclusion.

Mitigation = require human review and add a disclosure note for funders.

You walk away with a quick but structured ethical profile of the pilot.

Sample Ethics Canvas Template

Project Name: ____________________

Date: ____________________

Facilitator: ____________________

1. Stakeholders

Who directly benefits?

Who might be harmed or excluded?

Who needs to be consulted?

2. Intended Benefits

What good do we hope to achieve?

How does this align with our mission?

3. Potential Harms

What could go wrong?

Who could be negatively affected?

4. Values & Principles

What values should guide us (e.g., fairness, transparency, equity, privacy)?

Are there conflicts between these values?

5. Data & Inputs

What data will we use?

Is any of it sensitive, personal, or protected?

How will we minimize risks of misuse?

6. Transparency

What do we need to disclose to staff, beneficiaries, or funders?

How do we explain decisions made with AI or data?

7. Accountability

Who is responsible for reviewing risks and approving use?

How will we handle mistakes or incidents?

8. Mitigations

What safeguards will we put in place?

How will we monitor outcomes over time?

9. Community Input

How will we include voices of those most affected?

What feedback loops are in place?

10. Next Steps

What actions will we take before launch?

What needs to be reviewed or signed off?

Reminder: This canvas is a living document. Update it at each pilot stage and archive final versions for transparency.

Final word

Start small, show value, keep people at the center. Then dig into the next pilot once the last one is boringly reliable.

YOUR CALL TO ACTION: If you’re someone who likes sharing (hello, extroverts), CLICK HERE and tell me what you thought of this blog post. Have you implemented AI change management in your organization?

This article was a collaboration between MJ & AI.